Data Analytics

“Data is a precious thing and will last longer than the systems themselves.” – Tim Berners-Lee, inventor of the World Wide Web.

About

Data is a key asset in the modern world. Persisting, migrating and transforming data are often necessary steps to gather information out of your data.

While designing processes and connecting datasources to databases, you should not forget about security, operations, monitoring and all the other recommended best practices in cloud environments.

We as tecRacers are experienced as generalists and have dedicated special teams, ML included, to bring both aspects together.

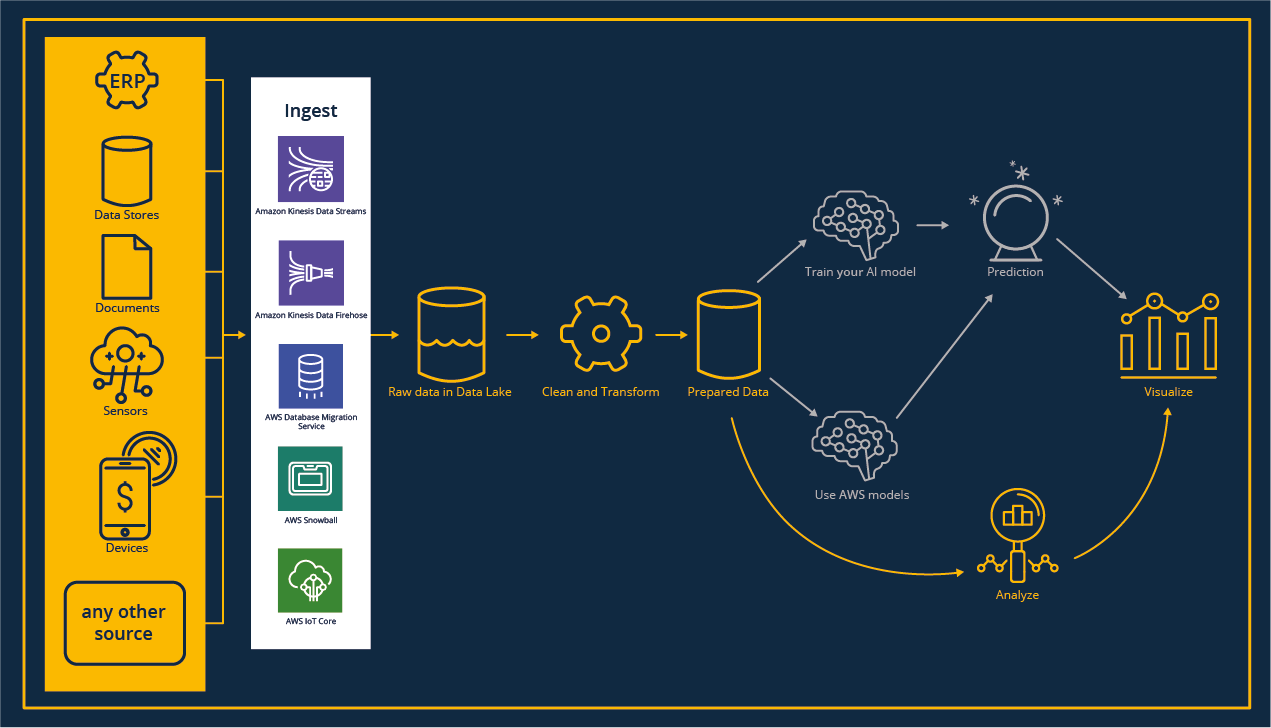

Ingest

We live in a world where data is omnipresent and our systems are overloaded by it.

To make use of it and unfold its potential, we first need to capture, persist and centrally access it. Whether you want to get started with new use cases in the cloud and need to shift existing data initially, or if you want to establish automated pipelines to connect your continuous dataflow to the cloud environment, the process of ingestion is the entry ticket to your workspace.

You can choose from a variety of services the appropriate ones for your streaming, IoT or batch workloads.

Project reference ‘Ingest’

Data Lake

Establishing a central repository for raw information leads to a vast number of possibilities for Data Analytics and Machine Learning use cases.

The Data Lake concept offers a lot of flexibility in creating brand new analyses, exploring data to find correlations between datasets, and serving as a backup in the transformation process when outliers in results need to be interpreted.

If you’re interested in information on Data Lakes, please see our Whitepaper.

Using a data lake is both: A great chance and also a risk for data owners, especially when it comes to GDPR-related data. Building a skillful Data Lake using suitable data formats, structuring data proficiently, and enabling row-level access is something we as tecRacers are well experienced with.

Project reference ‘Data Lake’

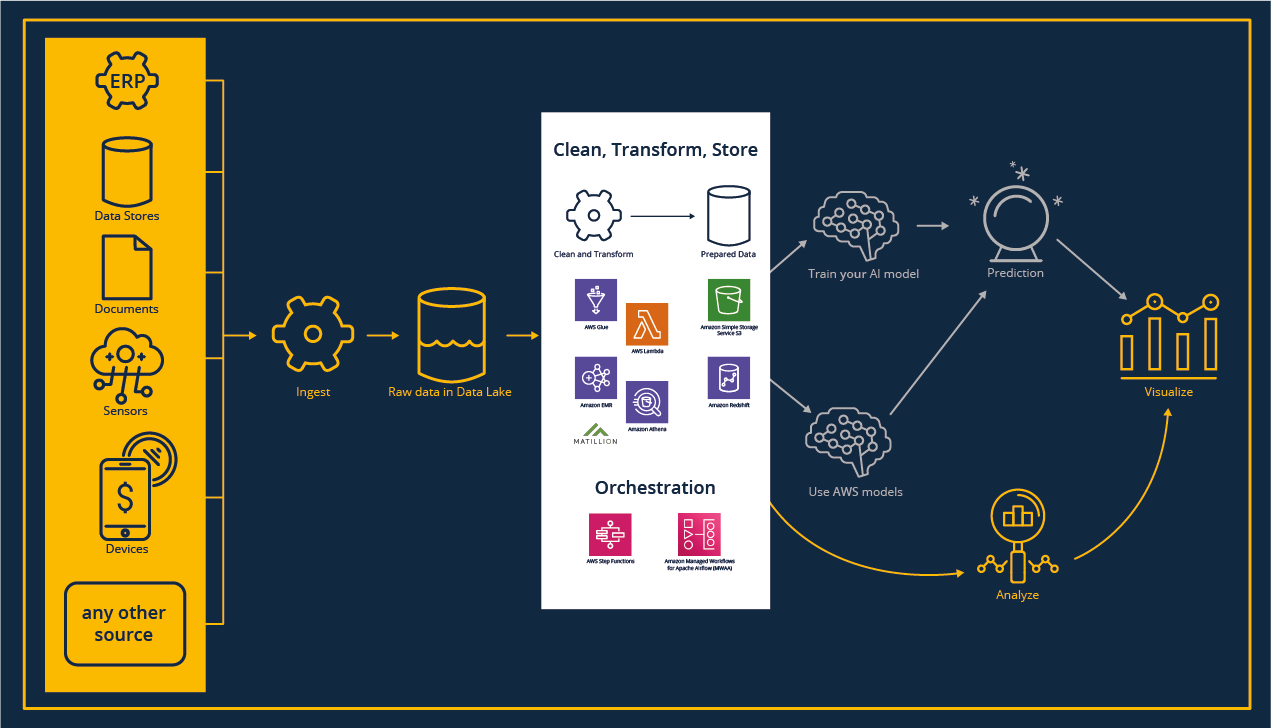

Clean, Transform and Store

The exciting, though most effortful part of the analytics process starts now: Preparing data for visualization or model training.

Graphical tools such as third-party tools like Matillion ETL are available next to classical Apache Hadoop-related workloads using Jupyter Notebooks, e.g., with Apache Spark or serverless functions.

Data transformation is not only challenging in terms of creating a suitable data warehouse model, cleaning and transforming data. It also requires orchestrating varying workloads regarding schedules, order of execution, and infrastructure.

Analyze

For analyses a consistent and business-oriented view is the key.

Different use cases require different access patterns from querying a classical data warehouse to accessing data on an Apache Hadoop cluster to newly and exciting storage options such as using an object storage or combing data between those – everything is possible.

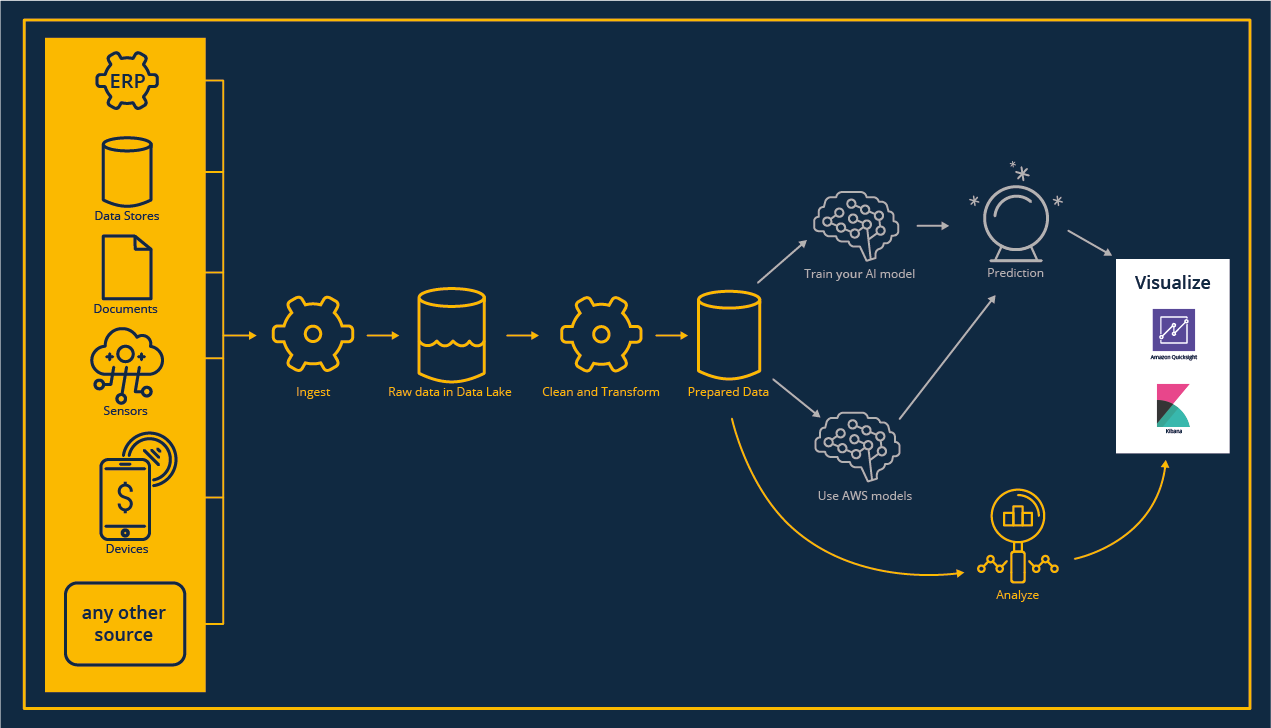

Visualize

The outcoming extracted information need to be humanly accessable, readable and understandable.

In this last step the goal is presenting and understanding the essence of our data. Also real-time tracking via dashboards is a key feature.

What actually is a data mesh?

The process described above gives a critical but incomplete picture of creating analyses.

It represents the technical process of analyzing data. However, organizational structures are neglected. Taking these and the company’s size into account, it can be beneficial to consider data as a product and, therefore, store and process it decentralized instead of filing all raw data in a centralized data lake. This concept is known as “Data Mesh”.

The main difference is that data owners are held responsible for cleaning and providing the data. Consistency and security is controlled by cross-team policies applied to the whole organization. We at tecRacer look forward to helping you choose your best data strategy.