Machine Learning

“What AI (Artificial Intelligence) and machine learning allows you to do is find the needle in the haystack.” – Bob Work

About

Machine Learning is an iterative process for gaining insights from data.

Challenges arise not only at the content level but also at the technical level.

From data analysis and feature engineering through to the evaluation of model metrics, you should not lose sight of security, operations, monitoring and all the other best practices recommended for cloud environments.

Developing, maintaining and deploying models in an established, repeatable way typically requires a defined process. This process combines Machine Learning (ML) with DevOps and is called MLOps.

As tecRacers, we combine broad experience as generalists with dedicated specialist teams, Machine Learning included, to bring both aspects together.

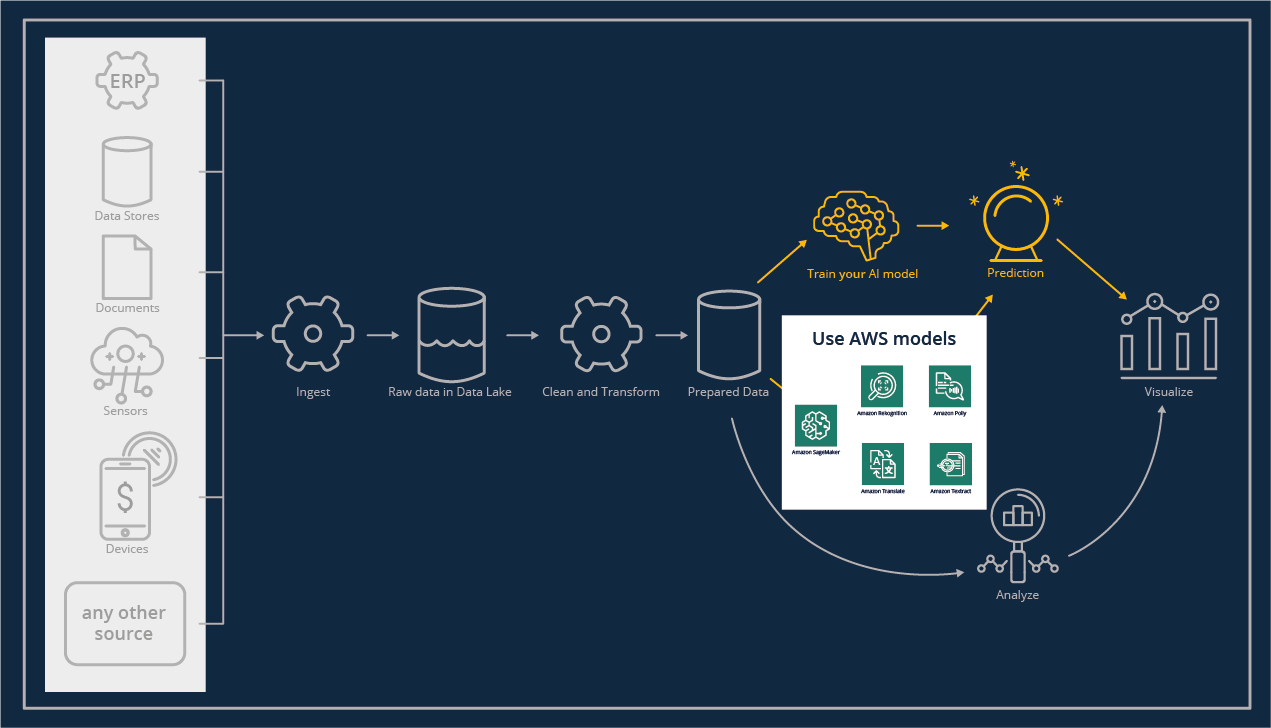

Use AWS AI model

With high-level machine learning services, we can get started quickly and explore the capabilities of machine learning.

Several services offer ready-to-use APIs that let you explore your data and get results immediately. For common, general-purpose problems such as image recognition, translation and tagging, these services deliver precise results directly, without any customization.
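To illustrate how little code such an API call needs, here is a minimal sketch that uses boto3 to call Amazon Rekognition (image labels, which can serve as tags) and Amazon Translate. It assumes AWS credentials and permissions are already configured. The helper names, the `eu-central-1` region and the confidence threshold are illustrative assumptions, not part of any AWS API.

```python
"""Sketch: calling managed AWS AI services with boto3.

Assumes AWS credentials and permissions are already configured. Function names
and the default region are illustrative assumptions, not part of any AWS API.
"""
from typing import Any


def label_image(rekognition: Any, image_bytes: bytes, min_confidence: float = 80.0) -> list[str]:
    """Return the names of labels Amazon Rekognition detects, e.g. for tagging images."""
    response = rekognition.detect_labels(
        Image={"Bytes": image_bytes},
        MaxLabels=10,
        MinConfidence=min_confidence,
    )
    return [label["Name"] for label in response["Labels"]]


def translate(translate_client: Any, text: str, target_language: str = "en") -> str:
    """Translate text with Amazon Translate; "auto" lets the service detect the source language."""
    response = translate_client.translate_text(
        Text=text,
        SourceLanguageCode="auto",
        TargetLanguageCode=target_language,
    )
    return response["TranslatedText"]


def make_clients(region: str = "eu-central-1") -> tuple[Any, Any]:
    """Create real boto3 clients (the region is an assumption).

    boto3 is imported here so the helpers above can also be used with test doubles.
    """
    import boto3

    return (
        boto3.client("rekognition", region_name=region),
        boto3.client("translate", region_name=region),
    )


# Example usage (requires AWS access):
#   rekognition, translate_client = make_clients()
#   with open("photo.jpg", "rb") as f:
#       print(label_image(rekognition, f.read()))
#   print(translate(translate_client, "Guten Morgen"))
```

Passing the client in as a parameter keeps the helpers easy to test without network access.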

Project reference ‘Use AWS AI model’

Hessische Zentrale für Datenverarbeitung

Project goals

Use AWS managed models for common tasks.

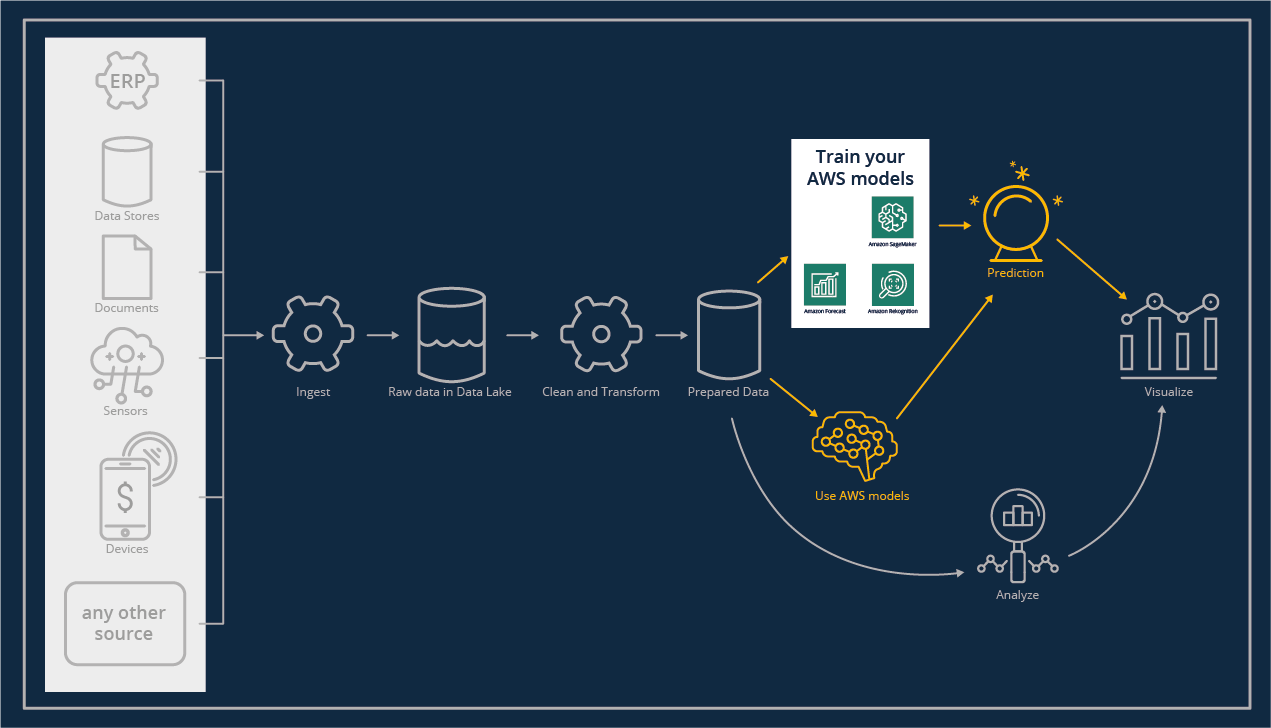

Train your AI model

Beyond a certain level of complexity and variety, there is no way around training your own models: the data or the demands of the use case may be too specific for the out-of-the-box services from AWS.

Although some services offer training of custom models, you may want to manage, experiment with, distribute and package models on your own.

This is where MLOps comes in, and especially Amazon SageMaker, which supports well-known frameworks such as PyTorch, TensorFlow and many more.
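A minimal sketch of launching a custom PyTorch training job with the SageMaker Python SDK could look like this. The script name `train.py`, the framework and Python versions, the instance type and the hyperparameters are assumptions chosen for illustration. You have to supply the IAM role ARN and the S3 location of your training data.

```python
"""Sketch: launching a custom PyTorch training job on Amazon SageMaker.

All concrete values (script name, versions, instance type, epochs) are assumptions.
"""
from typing import Any


def estimator_kwargs(role_arn: str, epochs: int = 10) -> dict[str, Any]:
    """Collect the settings for a SageMaker PyTorch estimator."""
    return {
        "entry_point": "train.py",        # hypothetical training script you provide
        "role": role_arn,                 # IAM role that SageMaker assumes for the job
        "framework_version": "2.0.1",     # PyTorch version of the managed container
        "py_version": "py310",
        "instance_count": 1,
        "instance_type": "ml.m5.xlarge",
        "hyperparameters": {"epochs": epochs},  # passed to train.py as --epochs
    }


def launch_training(role_arn: str, train_s3_uri: str, epochs: int = 10) -> Any:
    """Start the training job; requires the `sagemaker` package and AWS access."""
    from sagemaker.pytorch import PyTorch  # imported lazily so the module loads without it

    estimator = PyTorch(**estimator_kwargs(role_arn, epochs))
    # The "training" channel is made available in the container; train.py finds it via SM_CHANNEL_TRAINING.
    estimator.fit({"training": train_s3_uri})
    return estimator
```

After `fit` returns, the trained model can be hosted with `estimator.deploy(initial_instance_count=1, instance_type=...)`. Keeping the settings in a plain dictionary makes them easy to review and version alongside the training code.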

Project reference ‘Train your AI model’

Bayer CropScience

Project goals

Build a unique model that fits your use case precisely.

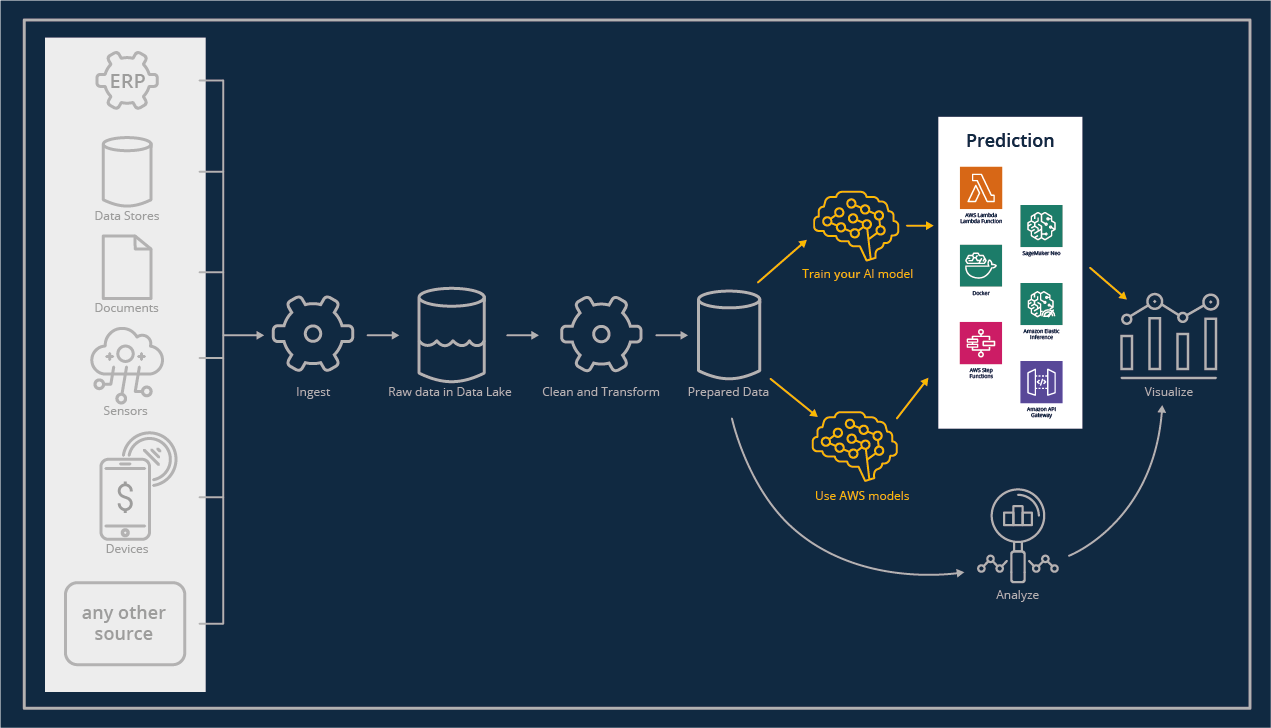

Prediction

Once the data scientists have done their exciting work (analyzing and evaluating the data, extracting statistical features, and training and evaluating a model that is ready to run), it is time for the actual deployment and serving.

Having control over your model artifacts lets you transform and compress them, for example to run them at the edge, in mobile apps or even on IoT devices. Heavyweight models run in containers on SageMaker endpoints, while latency-sensitive applications can use Elastic Inference to accelerate real-time requests.
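Once a model is deployed to a SageMaker endpoint, applications can request real-time predictions through the `sagemaker-runtime` API. In this sketch the caller supplies the endpoint name. The JSON payload shape (`{"instances": [...]}`) is an assumption, because the actual format depends on the deployed model's inference handler.

```python
"""Sketch: requesting a real-time prediction from a SageMaker endpoint.

The payload shape {"instances": [...]} is an assumption; it must match
whatever the deployed model's inference handler expects.
"""
import json
from typing import Any


def predict(runtime: Any, endpoint_name: str, features: list[float]) -> Any:
    """Send one feature vector to the endpoint and return the decoded JSON response."""
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=json.dumps({"instances": [features]}),
    )
    # The response body is a stream; read it fully and parse it as JSON.
    return json.loads(response["Body"].read())


# Example usage (requires AWS access and an existing endpoint; the name is hypothetical):
#   import boto3
#   runtime = boto3.client("sagemaker-runtime")
#   print(predict(runtime, "my-model-endpoint", [0.3, 1.7, 4.2]))
```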

MLOps

AWS offers services that enable companies to run machine learning quickly and flexibly, so that any enterprise can efficiently build machine learning models for many use cases. These services also simplify infrastructure provisioning and management, the deployment of machine learning code, the automation of the model publishing process, and the monitoring of model and infrastructure performance. The operation of such infrastructure is described by the coined term “MLOps”, which combines the notion of DevOps with that of Machine Learning.
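Automating the model publishing process usually involves a quality gate that decides whether a newly trained model may replace the one in production. The following pure-Python sketch shows the idea. All names (`ModelCandidate`, `should_promote`, `release`), the use of accuracy as the metric and the `min_gain` threshold are hypothetical. In practice, an orchestration tool such as SageMaker Pipelines could run such a check as one step of the release.

```python
"""Sketch: an automated quality gate for publishing models (all names hypothetical)."""
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ModelCandidate:
    version: str
    accuracy: float  # metric measured on a held-out evaluation set


def should_promote(
    candidate: ModelCandidate,
    production: Optional[ModelCandidate],
    min_gain: float = 0.01,
) -> bool:
    """Promote if nothing is in production yet or the candidate beats it by at least min_gain."""
    if production is None:
        return True
    return candidate.accuracy >= production.accuracy + min_gain


def release(
    candidate: ModelCandidate,
    production: Optional[ModelCandidate],
    deploy: Callable[[ModelCandidate], None],
) -> Optional[ModelCandidate]:
    """Deploy the candidate if it passes the gate and return the model serving afterwards."""
    if should_promote(candidate, production):
        deploy(candidate)
        return candidate
    return production
```

Keeping the gate as a plain function makes it easy to unit-test and to call from whichever CI/CD or pipeline tool runs the release; the `deploy` callback stands in for the actual deployment step.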

DevOps is a combination of mindsets, practices and tools that enable organizations to deliver applications and services faster and more easily. It enables them to evolve and improve products in less time than companies that rely on traditional processes for software development and infrastructure management. This speed advantage allows companies to better serve their customers and compete more effectively in the marketplace.

With MLOps, machine learning models can be developed, deployed and monitored (including their performance) faster, more easily, more repeatably and with greater fault tolerance. Our experienced consultants are happy to help define and implement this process.