AWS Cost Management Made Easy: Build Your Own Calculator with the AWS Pricing API

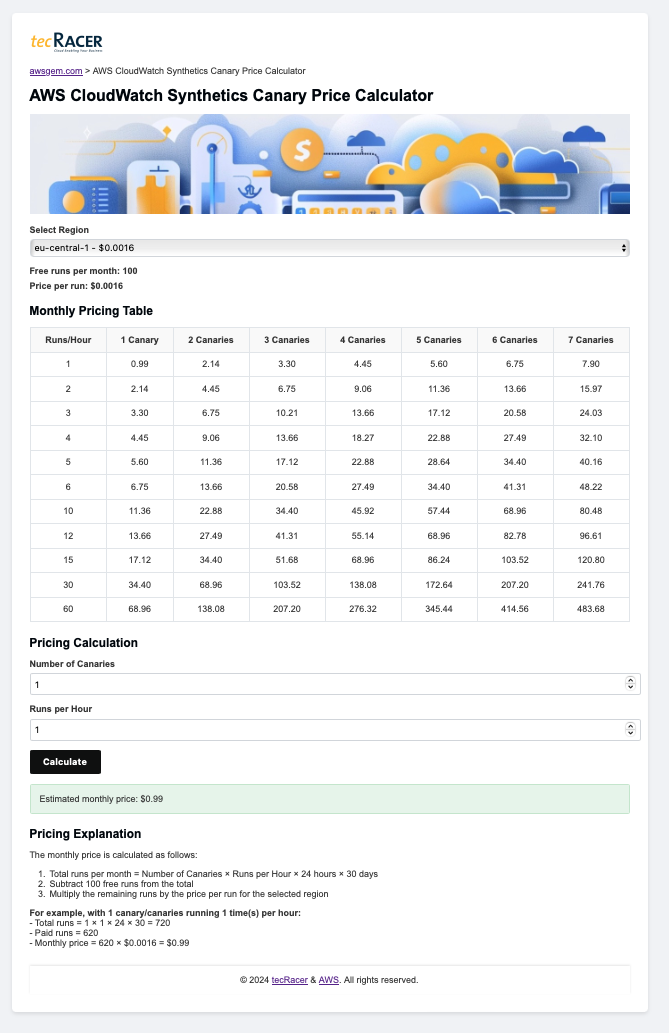

When discussing AWS pricing with customers, limitations in the official AWS Pricing Calculator can sometimes hinder accurate cost estimations. This blog post addresses a specific challenge encountered with AWS CloudWatch Synthetics pricing and provides a solution by building a custom calculator using the AWS Pricing API.

CloudWatch Synthetics is an AWS service that allows you to create canaries, which are configurable scripts that run on a schedule to monitor your endpoints and APIs. One of the main challenges we faced was the need to easily compare different setups of CloudWatch Synthetics, specifically defining the number of runs per hour and generating a comparison table—features not available in the AWS Calculator.

By following this guide, you’ll learn how to create tailored calculators for any AWS service, similar to those found at AWS Canary Calculator or Vantage Instances. Our custom solution allows for more flexible configurations and side-by-side comparisons, enabling better decision-making when it comes to choosing the most cost-effective setup for your monitoring needs.

The working example for this blog can be found here: AWS Canary Calculator. With the information provided in the blog, you can build it on your own or apply the concepts to create a calculator for your specific use case.

Prerequisites

Before we dive into the implementation, ensure you have the following:

- An AWS account with appropriate permissions to:
  - Create and manage Lambda functions
  - Access the AWS Pricing API
  - Create and write to S3 buckets
  - Set up CloudFront distributions
  - Manage Route 53 domains (if using a custom domain)
- Basic knowledge of:
  - Python programming
  - AWS Lambda
  - Amazon S3
  - HTML, CSS, and JavaScript for front-end development
- AWS CLI installed and configured on your local machine
- A code editor of your choice
- (Optional) A registered domain name if you plan to host the calculator on a custom domain

With these prerequisites in place, you’re ready to start building your custom AWS pricing calculator.

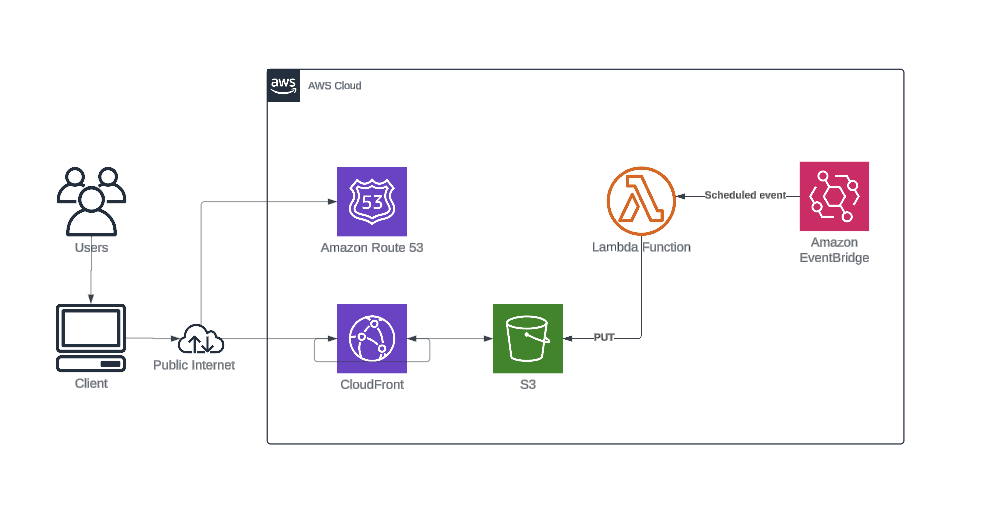

Architecture Overview

To create a robust and scalable solution for fetching and displaying AWS pricing information, we’ll use a combination of AWS services. Here’s a high-level overview of the architecture:

- Amazon S3 (Simple Storage Service)
  - Hosts the static website and stores the JSON file with pricing data.
- Amazon CloudFront
  - Distributes content globally with low latency and high transfer speeds.
- Amazon Route 53
  - Manages the domain name for the static website.
- AWS Lambda
  - Fetches and processes pricing data from the AWS Pricing API.
- Amazon EventBridge
  - Triggers the Lambda function on a daily schedule.

Workflow

- Static Website Hosting:
  - The static website, including all HTML, CSS, and JavaScript files, is hosted in an S3 bucket.
  - CloudFront is configured to serve the content from the S3 bucket, ensuring fast and secure access.
- Domain Name Management:
  - Route 53 is used to manage the domain name and DNS entries for the website, allowing users to access it via a custom URL.
- Fetching and Storing Pricing Data:
  - The Lambda function is triggered daily by an EventBridge scheduled event.
  - The Lambda function queries the AWS Pricing API to fetch the latest pricing information for CloudWatch Synthetics canaries.
  - The raw data is processed to extract the relevant pricing details, which are then stored as a JSON file in the S3 bucket (see http://awsgem.com/canarycalc/prices.json ):

{ "ap-southeast-2": {"USD": "0.0017000000"}, "cn-north-1": {"CNY": "0.0178000000"}, "sa-east-1": {"USD": "0.0022000000"}, "eu-central-1": {"USD": "0.0016000000"}, "us-east-1": {"USD": "0.0012000000"} ... }

Implementation Guide

Setting Up the Lambda Function

This Lambda function retrieves pricing information for AWS CloudWatch Synthetics Canaries across different regions using the AWS Pricing API. It then processes this data and stores it in an S3 bucket for future use.

Before implementing this function, ensure you have:

- Appropriate AWS permissions
- A Lambda function set up with the correct IAM role
- Necessary permissions for the IAM role:
  - Access to the Pricing API
  - Write access to S3
  - Ability to create CloudWatch logs
- S3 bucket policy adjusted to allow Lambda function write access
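The role permissions listed above could be expressed as an IAM policy document along these lines. This is a minimal sketch, not the policy used by the original project: the helper name `build_lambda_policy`, the bucket name, and the `canarycalc/` key prefix are illustrative assumptions, and the log resource is left broad for brevity.

```python
import json


def build_lambda_policy(bucket_name, key_prefix="canarycalc/"):
    """Return an IAM policy document (as a dict) for the pricing Lambda.

    bucket_name and key_prefix are placeholders; adjust them to your setup.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Read product and price data from the Pricing API
                "Effect": "Allow",
                "Action": ["pricing:GetProducts"],
                "Resource": "*",
            },
            {
                # Write the generated JSON file into the bucket
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket_name}/{key_prefix}*",
            },
            {
                # Let the function create its log group and stream and write log events
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "arn:aws:logs:*:*:*",
            },
        ],
    }


policy = build_lambda_policy("my-example-bucket")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console as an inline policy for the execution role. Narrowing the logs resource to the function's own log group would be a reasonable next step.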

Code Walkthrough

The complete code for the Lambda function is available at https://github.com/vidanov/tecracer-blog-projects/tree/main/aws-calculator/lambda

Let’s break down the Lambda function code into manageable sections:

1. Imports and Setup

    import json
    import boto3
    import os

These lines import the required libraries:

- json: For parsing and creating JSON data
- boto3: The AWS SDK for Python
- os: For accessing environment variables

2. Helper Function: extract_prices

    def extract_prices(data):
        # Maps each region code to its price, e.g. {"us-east-1": {"USD": "0.0012000000"}}
        prices_per_region = {}
        for item in data:
            product = item['product']
            region_code = product['attributes']['regionCode']
            terms = item['terms']['OnDemand']
            # Walk every on-demand term and price dimension; the last one seen wins
            for term_value in terms.values():
                for price_value in term_value['priceDimensions'].values():
                    price = price_value['pricePerUnit']
                    prices_per_region[region_code] = price
        return prices_per_region

This function processes the raw pricing data:

- It iterates through each item in the data

- Extracts the region code and pricing information

- Creates a dictionary with region codes as keys and prices as values
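To make the nesting concrete, the sketch below runs the same traversal on a hand-written record. The record is a trimmed, hypothetical stand-in for one `PriceList` entry: the `EXAMPLESKU` keys are invented, and real entries carry many more fields. The function is repeated so the snippet runs on its own.

```python
def extract_prices(data):
    """Map each region code to the pricePerUnit dictionary found for it."""
    prices_per_region = {}
    for item in data:
        region_code = item['product']['attributes']['regionCode']
        terms = item['terms']['OnDemand']
        for term_value in terms.values():
            for price_value in term_value['priceDimensions'].values():
                prices_per_region[region_code] = price_value['pricePerUnit']
    return prices_per_region


# Hypothetical, trimmed-down price item; the keys under OnDemand and
# priceDimensions are made up for illustration
sample_item = {
    "product": {"attributes": {"regionCode": "us-east-1"}},
    "terms": {
        "OnDemand": {
            "EXAMPLESKU.TERM": {
                "priceDimensions": {
                    "EXAMPLESKU.TERM.DIM": {
                        "pricePerUnit": {"USD": "0.0012000000"},
                    }
                }
            }
        }
    },
}

print(extract_prices([sample_item]))
# → {'us-east-1': {'USD': '0.0012000000'}}
```

Note that the price stays a string keyed by currency, which is why the stored JSON file contains values such as `{"USD": "0.0012000000"}` rather than plain numbers.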

3. Lambda Handler

    def lambda_handler(event, context):
        service_code = 'AmazonCloudWatch'
        # The Pricing API is served from only a few regional endpoints; us-east-1 is one of them
        pricing_client = boto3.client('pricing', region_name='us-east-1')
        filters = [
            {"Type": "TERM_MATCH", "Field": "serviceCode", "Value": service_code},
            {"Type": "TERM_MATCH", "Field": "productFamily", "Value": "Canaries"}
        ]
        # MaxResults is capped at 100 per call; further pages are returned via NextToken
        response = pricing_client.get_products(
            ServiceCode=service_code,
            Filters=filters,
            FormatVersion='aws_v1',
            MaxResults=100
        )

The lambda_handler function is the entry point for the Lambda:

- It sets up the service code for CloudWatch

- Creates a pricing client (note: the Pricing API is available only through a small number of regional endpoints, including us-east-1)
- Defines filters to get specific pricing data (CloudWatch Canaries)
- Makes an API call to get the pricing products
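A single `get_products` call returns at most 100 items, and the response carries a `NextToken` when more results exist. If a filter ever matches more products than fit on one page, a loop like the following could collect them all. This is a sketch under assumptions: `fetch_all_price_items` is a hypothetical helper, and it accepts any client object exposing `get_products`, so in practice you would pass `boto3.client('pricing', region_name='us-east-1')`.

```python
import json


def fetch_all_price_items(pricing_client, service_code, filters):
    """Collect every PriceList entry across pages, parsed into dictionaries."""
    items = []
    kwargs = {
        "ServiceCode": service_code,
        "Filters": filters,
        "FormatVersion": "aws_v1",
        "MaxResults": 100,
    }
    while True:
        response = pricing_client.get_products(**kwargs)
        # Each PriceList entry is a JSON string, so parse it before storing
        items.extend(json.loads(entry) for entry in response["PriceList"])
        next_token = response.get("NextToken")
        if not next_token:
            break
        # Ask for the next page on the following iteration
        kwargs["NextToken"] = next_token
    return items
```

The returned list can be handed straight to `extract_prices`, since it has the same shape as the parsed `price_list` in the handler.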

4. Processing the Response

        price_list = [json.loads(price_item) for price_item in response['PriceList']]
        prices = extract_prices(price_list)

These lines process the API response:

- Convert each item in PriceList from a JSON string to a Python dictionary
- Use the extract_prices function to get a simplified price dictionary

5. Storing Results in S3

        s3_client = boto3.client('s3')
        bucket_name = os.getenv('s3_bucket')
        file_name = 'canarycalc/prices.json'
        s3_client.put_object(
            Bucket=bucket_name,
            Key=file_name,
            Body=json.dumps(prices)
        )
        print(f'Prices saved to S3 bucket {bucket_name} with file name {file_name}')

Finally, the function stores the processed data:

- Create an S3 client

- Get the bucket name from an environment variable

- Define the file path and name

- Upload the JSON data to S3

- Print a confirmation message
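Because the file is fetched by a browser through CloudFront, it may help to set the object's content type and a cache lifetime when uploading. The sketch below shows one way to do that. `upload_prices` is a hypothetical helper, the one-day `max-age` is an assumption chosen to match the daily refresh, and `s3_client` can be any object with a `put_object` method, such as `boto3.client('s3')`.

```python
import json


def upload_prices(s3_client, bucket_name, prices, key="canarycalc/prices.json"):
    """Upload the price dictionary as JSON with explicit HTTP metadata."""
    s3_client.put_object(
        Bucket=bucket_name,
        Key=key,
        Body=json.dumps(prices),
        ContentType="application/json",  # so browsers treat the file as JSON
        CacheControl="max-age=86400",    # assumption: cache for one day
    )
    return key
```

Inside the handler this would replace the plain `put_object` call, for example `upload_prices(s3_client, bucket_name, prices)`.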

Output

The resulting JSON file in S3 will have a structure like this:

    {
      "ap-southeast-2": {"USD": "0.0017000000"},
      "us-east-1": {"USD": "0.0012000000"},
      // ... other regions ...
    }

This format allows for easy consumption by web applications or other services that need regional pricing information for CloudWatch Synthetics Canaries.
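The calculation behind a comparison table is simple once the per-run price is known: runs per hour, times hours in a month, times the regional price. The following sketch illustrates that arithmetic in Python; it is not the front-end code of the published calculator. It assumes a 30-day month and ignores the free tier unless `free_runs` is set. The names `monthly_cost` and `comparison_table` are illustrative, and the prices are the sample values shown above.

```python
from decimal import Decimal

HOURS_PER_MONTH = 24 * 30  # assumption: 30-day month


def monthly_cost(price_per_run, runs_per_hour, free_runs=0):
    """Estimated monthly cost for one canary at the given run frequency."""
    runs = runs_per_hour * HOURS_PER_MONTH
    billable = max(runs - free_runs, 0)
    # Decimal avoids float rounding noise on small per-run prices
    return Decimal(price_per_run) * billable


def comparison_table(prices, region, runs_per_hour_options):
    """Return (runs_per_hour, runs_per_month, cost) rows for one region."""
    currency, price = next(iter(prices[region].items()))
    return [
        (rph, rph * HOURS_PER_MONTH, f"{monthly_cost(price, rph):.2f} {currency}")
        for rph in runs_per_hour_options
    ]


prices = {
    "us-east-1": {"USD": "0.0012000000"},
    "eu-central-1": {"USD": "0.0016000000"},
}
for row in comparison_table(prices, "us-east-1", [1, 12, 60]):
    print(row)
# → (1, 720, '0.86 USD')
# → (12, 8640, '10.37 USD')
# → (60, 43200, '51.84 USD')
```

Running the same options against another region, or several canaries, only requires another call to `comparison_table`, which is the kind of side-by-side view the official calculator does not offer.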

Deployment and Testing

- Create a new Lambda function in the AWS Console.
- Copy the code into the function editor.
- Set the environment variable s3_bucket with your S3 bucket name.
- Configure an EventBridge rule to trigger the function daily.
- Test the function manually to ensure it works as expected.
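The EventBridge step could also be scripted instead of clicked through in the console. The sketch below wires a daily schedule to the function using the boto3 `events` and `lambda` client operations `put_rule`, `add_permission`, and `put_targets`. The rule name, statement ID, and target ID are hypothetical, and the clients are passed in as arguments so the helper can be exercised without AWS access.

```python
def schedule_daily(events_client, lambda_client, function_name, function_arn,
                   rule_name="daily-pricing-update"):
    """Create a daily EventBridge rule and point it at the Lambda function."""
    # Create (or update) a rule that fires once a day
    rule = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 day)",
        State="ENABLED",
    )
    # Allow EventBridge to invoke the function, restricted to this rule
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule["RuleArn"],
    )
    # Register the function as the rule's target
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "pricing-lambda", "Arn": function_arn}],
    )
    return rule["RuleArn"]
```

With real clients this would be called as `schedule_daily(boto3.client('events'), boto3.client('lambda'), name, arn)`. Running it a second time with the same rule name would fail at `add_permission`, because the statement ID already exists on the function.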

Front-end Integration

To use the pricing data in a front-end calculator, you can create a basic HTML/JavaScript application. The HTML, JavaScript, and CSS files and the images for the AWS CloudWatch Synthetics Canary Calculator are available at https://github.com/vidanov/tecracer-blog-projects/blob/main/aws-calculator/

Troubleshooting

Common issues and solutions:

- S3 permission denied: Check the Lambda execution role permissions.

- Pricing API errors: Verify the service code and filters are correct.
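When a query returns nothing, one way to check the filter values is to ask the Pricing API which values exist for an attribute. The sketch below uses the `get_attribute_values` operation for that. `list_attribute_values` is a hypothetical helper that takes any client exposing that method, for example `boto3.client('pricing', region_name='us-east-1')`.

```python
def list_attribute_values(pricing_client, service_code, attribute_name):
    """Return all values the Pricing API reports for one attribute of a service."""
    values = []
    kwargs = {"ServiceCode": service_code, "AttributeName": attribute_name}
    while True:
        response = pricing_client.get_attribute_values(**kwargs)
        values.extend(entry["Value"] for entry in response["AttributeValues"])
        next_token = response.get("NextToken")
        if not next_token:
            break
        # More values are available; request the next page
        kwargs["NextToken"] = next_token
    return values
```

Checking whether `"Canaries"` appears in `list_attribute_values(client, "AmazonCloudWatch", "productFamily")` would confirm that the `productFamily` filter used in the handler still matches what the API offers.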

Resources

- Official AWS pricing calculator

- tecRacer AWS CloudWatch Synthetics Canary Price Calculator

- https://instances.vantage.sh

- AWS Synthetic monitoring (canaries)

- Use an Amazon CloudFront distribution to serve a static website

- AWS Web Service Pricing API for Python

What else

- AWS pricing is comprehensive and complex, and we have shown only a very basic example here. You need to explore the JSON responses for the service whose prices you want to estimate and implement logic that considers factors like Savings Plans or the free tier.
- You can implement similar functionality using spreadsheets like Excel or Google Sheets, or other solutions where you can integrate pricing APIs into your existing cost planning tools. This flexibility allows you to adapt your cost management strategies to your specific needs and tools.
- FinOps encompasses much more than up-front cost estimation. Using tools like the AWS Pricing Calculator and pricing pages is just a small example and a building block for your successful cost planning.
- Explore tools like terracost or the Well-Architected Labs Cloud Intelligence Dashboards.
- Learn more by enrolling in our FinOps Training courses.

Conclusion

Building a custom AWS pricing calculator using the AWS Pricing API offers flexibility and accuracy in cost estimation. This approach can be extended to any AWS service, enabling you to create tailored solutions that meet your specific needs.

By leveraging serverless architecture and automating data updates, you can maintain an up-to-date and cost-effective pricing tool. As AWS services and pricing models evolve, your custom calculator can easily adapt, ensuring you always have accurate cost estimates at your fingertips.

Remember to keep your solution updated with the latest AWS pricing changes and consider contributing your improvements back to the community. Happy calculating!

– Alexey