Hands on: Migrating from PostgreSQL to MySQL with the AWS Database Migration Service (DMS)

This content is more than 4 years old and the cloud moves fast so some information may be slightly out of date.

Hello, today we’ll take a look at how to migrate from PostgreSQL to MySQL using AWS DMS, the database migration service of AWS.

This is a hands on article, so we’ll be light on theory and start by preparing databases for our source and targets with some sample data. Afterwards we’ll set up the DMS instance and then configure our migration and transformation job. I’ll also point out some of the things to keep in mind for your own migrations along the way. Let’s get started.

There can’t be a database migration without databases

We need a PostgreSQL database as source and a MySQL database as target. In case you already have both databases available, you can skip to the Preparing databases section.

First we’re going to prepare the source database on an EC2 instance and then we’ll set up the target database.

Building the source database

- Create an EC2 instance using Amazon Linux 2

- Add a Security group to your instance that allows to ports 22. To reduce security risks, you may want to only allow port 22 communication from your IP. We’ll come back later to the security group once we have an IP address for our replication instance.

- Connect to your instance using ssh

Postgres are you there?

-

Install PostgreSQL (or let it run inside docker ^_^ ). The following script will install Docker on your instance and start a PostgreSQL server inside a container with the username and password set to

postgres.# Install and enable docker as a service sudo yum install docker -y sudo systemctl enable docker sudo systemctl start docker # Create a directory for the data volume mkdir ~/postgres_data -p # Run the container and expose the # database port on our instance sudo docker run -d \ --name postgres_container \ -p 5432:5432 \ -e POSTGRES_PASSWORD=postgres \ -e PGDATA=/var/lib/postgresql/data/pgdata \ -v ~/postgres_data/:/var/lib/postgresql/data \ --restart unless-stopped \ postgres:12 -

Start the postgres client with

sudo docker exec -it postgres_container psql -U postgres -

Populate your PostgreSQL database with the following sample table. Inspired from TutorialsPoint.

CREATE TABLE COMPANY( ID INT PRIMARY KEY NOT NULL, NAME TEXT NOT NULL, AGE INT NOT NULL, ADDRESS CHAR(50), SALARY REAL, JOIN_DATE DATE ); INSERT INTO COMPANY (ID,NAME,AGE,ADDRESS,SALARY,JOIN_DATE) VALUES (1, 'Paul', 32, 'California', 20000.00,'2001-07-13'); INSERT INTO COMPANY (ID,NAME,AGE,ADDRESS,JOIN_DATE) VALUES (2, 'Allen', 25, 'Texas', '2007-12-13'); INSERT INTO COMPANY (ID,NAME,AGE,ADDRESS,SALARY,JOIN_DATE) VALUES (3, 'Teddy', 23, 'Norway', 20000.00, DEFAULT ); INSERT INTO COMPANY (ID,NAME,AGE,ADDRESS,SALARY,JOIN_DATE) VALUES (4, 'Mark', 25, 'Rich-Mond ', 65000.00, '2007-12-13') , (5, 'David', 27, 'Texas', 85000.00, '2007-12-13');

Now that we’ve successfuly taken care of the source database, let’s work on getting the target database set up.

Building the target database

Note: You can also install the mysql database on the same instance containing the postgres database. In that case skip the steps 1 and 2.

-

Create another EC2 instance using Amazon Linux 2

-

Add a Security group to your instance that allows connections to ports 22. To reduce security risks, you may want to only allow port 22 communication from your IP. We’ll come back later to the security group once we have an IP address for our replication instance.

-

Connect to your instance using ssh

-

Install MySQL (or let in run inside docker ^_^ ). The following script will start a MySQL instance inside docker with root password

mysql.# Install and enable docker as a service sudo yum install docker -y sudo systemctl enable docker sudo systemctl start docker # Create a directory for the data volume mkdir ~/mysql_data -p # Run the container and expose the # database port on our instance sudo docker run -d \ --name mysql_container \ -p 3306:3306 \ -e MYSQL_ROOT_PASSWORD=mysql \ -v ~/mysql_data:/var/lib/mysql \ --restart unless-stopped \ mysql:8.0

That’s it for the first step. We now have both our databases up and running!

Preparing databases

Assuming your databases are up and running, let’s see what is required to have them ready for DMS

Source (PostgreSQL) database prerequisites

At the time of writing this, AWS DMS only support PostgreSQL 9.x (starting at 9.4) through 12.x as the source database.

In case you are migrating from PostgreSQL 10.x please have a look at the extra steps required for this version in the AWS DMS recommendations for PostgreSQL v10.

For other supported version, the preparation steps are as follows:

-

Install a text editor if not available on your server/container:

sudo docker exec -it postgres_container sh -c "apt update && apt install nano" -

Open your

pg_hba.confconfiguration file:sudo docker exec -it postgres_container nano "/var/lib/postgresql/data/pgdata/pg_hba.conf" -

Add the following lines at the end of the previously opened file (

pg_hba.conf) (You can save/exit in nano withCtrl + X,Y,Enter):# Allow users to establish connections from any host host all all 0.0.0.0/0 md5 # Allow replication connections from any host, for user aws_dms host replication aws_dms 0.0.0.0/0 md5This allows connections from anywhere and replication connections from anywhere for the user

aws_dms. The ip-address (to be known later) of the replication instance could be used instead of of0.0.0.0/0however it is fine as the ip-based restriction will be set in the security-group later once the ip address is known. -

Open your

postgresql.confconfiguration filesudo docker exec -it postgres_container nano "/var/lib/postgresql/data/pgdata/postgresql.conf" -

Apply the following modifications to the file

postgresql.conf(You can search in nano withCtrl + W):- Set

wal_leveltological(without quotes). - Set

max_replication_slotsto a value greater than 1. - Set

max_wal_sendersto a value greater than 1. - Set

wal_sender_timeoutto 0.

- Set

The wal_sender_timeout parameter terminates replication connections that are inactive longer than the specified number of milliseconds.

Although the default is 60 seconds, AWS recommends to set this parameter to zero, which disables the timeout mechanism.

-

Restart the postgres database (or the container inside which it is running)

sudo docker restart postgres_container -

Start the postgres client with

sudo docker exec -it postgres_container psql -U postgres -

Create a user with read access to the source schemas/tables. Grant Superuser+Replication privileges if you want Change Data Capture too (our case):

CREATE ROLE aws_dms LOGIN REPLICATION SUPERUSER PASSWORD 'aws_dms_password'; GRANT USAGE ON SCHEMA PUBLIC TO aws_dms; GRANT SELECT ON ALL TABLES IN SCHEMA PUBLIC TO aws_dms;

Target (MySQL) database prerequisites

We need to create credentials (user: aws_dms, password: aws_dms_password) and a schema (aws_dms_schema) that we will pass to aws dms.

It is possible to use existing credentials and schemas.

We start mysql in our container (the root password for our mysql in container is mysql):

sudo docker exec -it mysql_container mysql -p

And enter the password: mysql.

Once we are connect we can run the following command to create desired schema and a user with enough privileges to use it.

CREATE USER 'aws_dms'@'%' IDENTIFIED BY 'aws_dms_password';

CREATE SCHEMA aws_dms_schema;

GRANT ALTER, CREATE, DROP, INDEX, INSERT, UPDATE, DELETE, SELECT ON aws_dms_schema.* TO 'aws_dms'@'%';

GRANT ALL PRIVILEGES ON awsdms_control.* TO 'aws_dms'@'%';

Prepare the migration in AWS/DMS

Get the roles and permissions ready

AWS DMS requires some specific roles: dms-vpc-role, dms-cloudwatch-logs-role.

In case you use Amazon Redshift as a target database dms-access-for-endpoint is required as well.

These roles are normally created when using DMS with the AWS Console.

If you happen to use the cli or if for whatever reason they aren’t created, please refer to the documentation for instructions on how to create them.

You need source and target endpoints

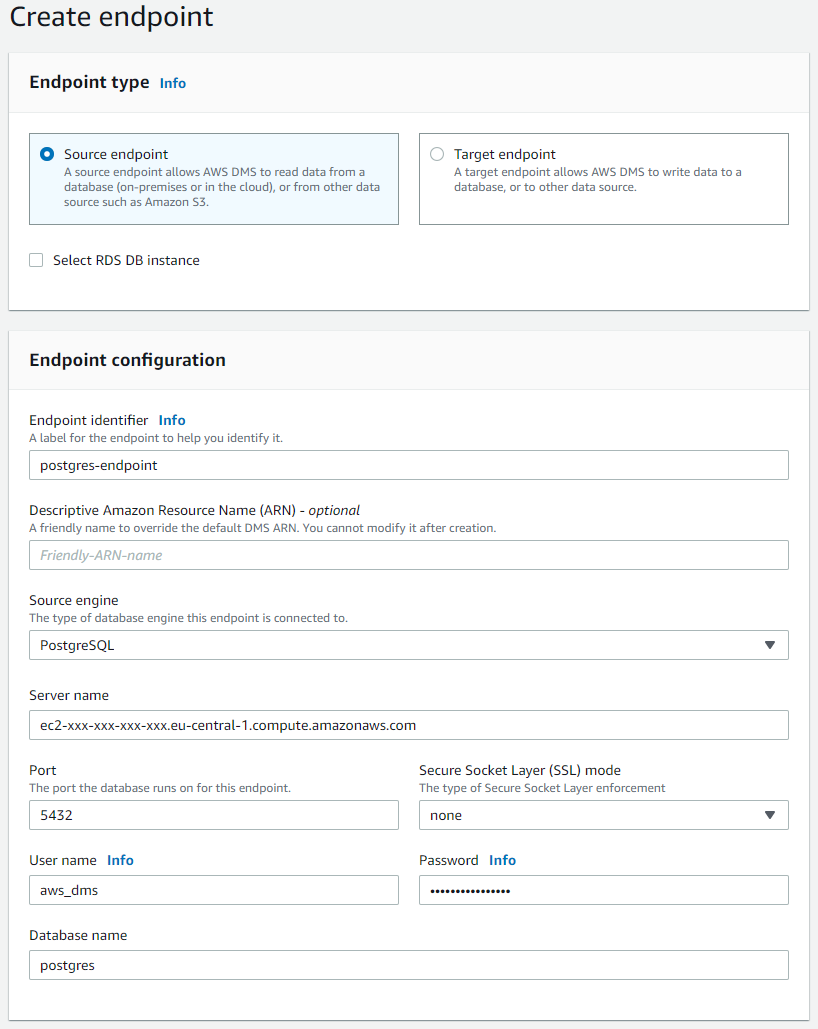

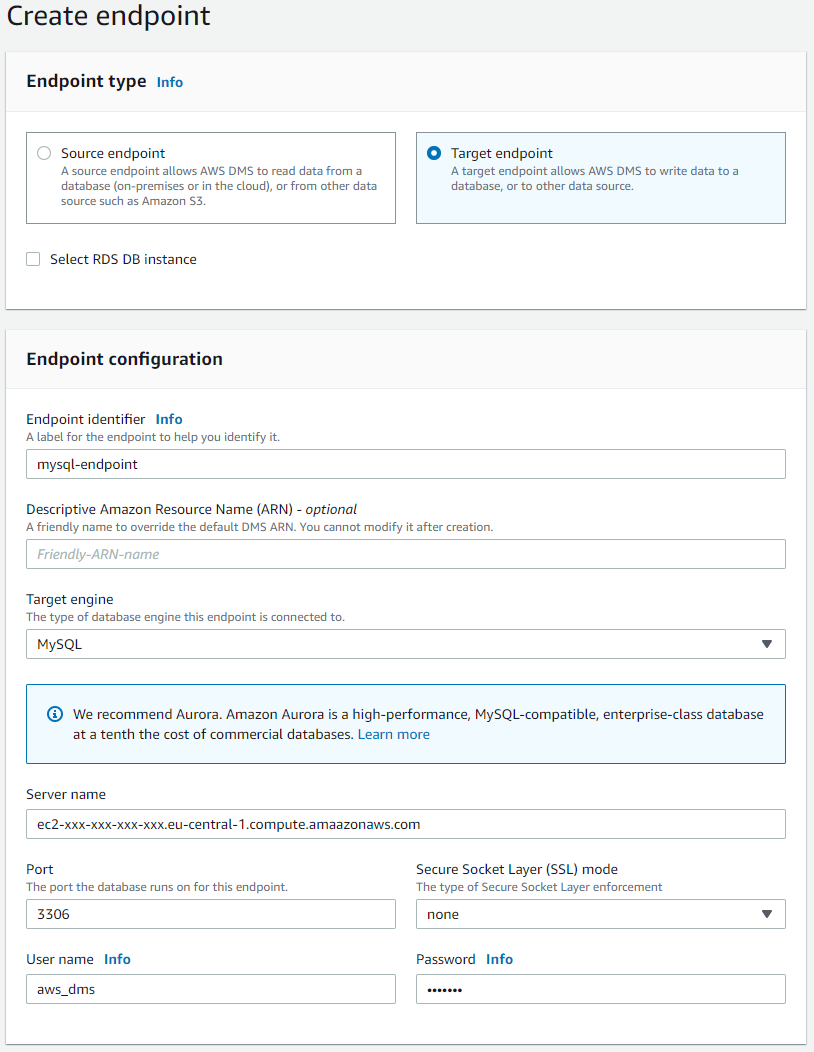

Get into AWS DMS and create a source endpoint for your PostgreSQL database and a target endpoint for your MySQL database. The source endpoint should look as follows:

The target endpoint should look as follows:

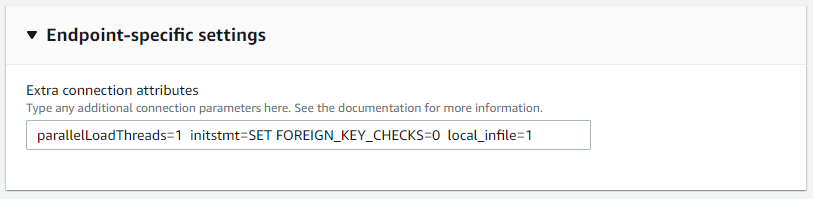

Make sure to set the following settings for your target (MySQL) endpoint:

parallelLoadThreads=1 initstmt=SET FOREIGN_KEY_CHECKS=0 local_infile=1

parallelLoadThreads defines how many threads should be used to load the data into the target MySQL database. A low number of threads is recommended as each thread requires its own database connection.

initsmt=SET FOREIGN_KEY_CHECKS disables foreign key checks during the full load.

local_infile=1 lets AWS DMS know it should load data into the target database.

More information about the configurations of a MySQL compatible database are available here.

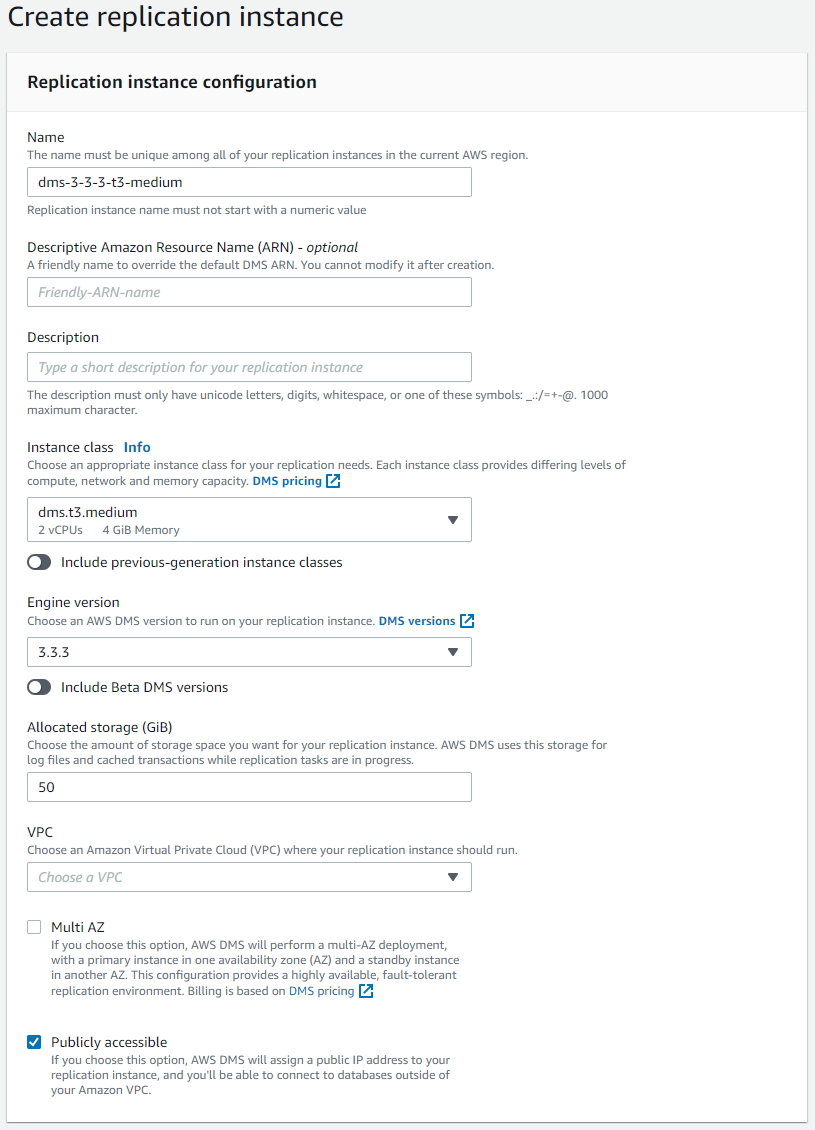

Create a replication instance

Make sure to select a VPC and an instance with enough memory.

The selected DMS version should be adequate for your database version (e.g. DMS>=3.3.1 for MySQL 8.0 and DMS==3.3.3 for PostgreSQL 12.x) we chose DMS version 3.3.3.

Check or uncheck the checkbox Publicly available depending on whether or not your databases are able to communicate with the replication over a private network.

Since we now have an IP address for our replication instance, we can now go back to the security groups of our PostgreSQL and MySQL servers (EC2 instances).

First, we allow incoming traffic from the replication instance at port 5432 to the security group of our PostgreSQL database.

Then, we allow incoming traffic from the replication instance at port 3306 to the security group of our MySQL database.

Now we’ve finished all the prerequisite steps and can finally create our replication task.

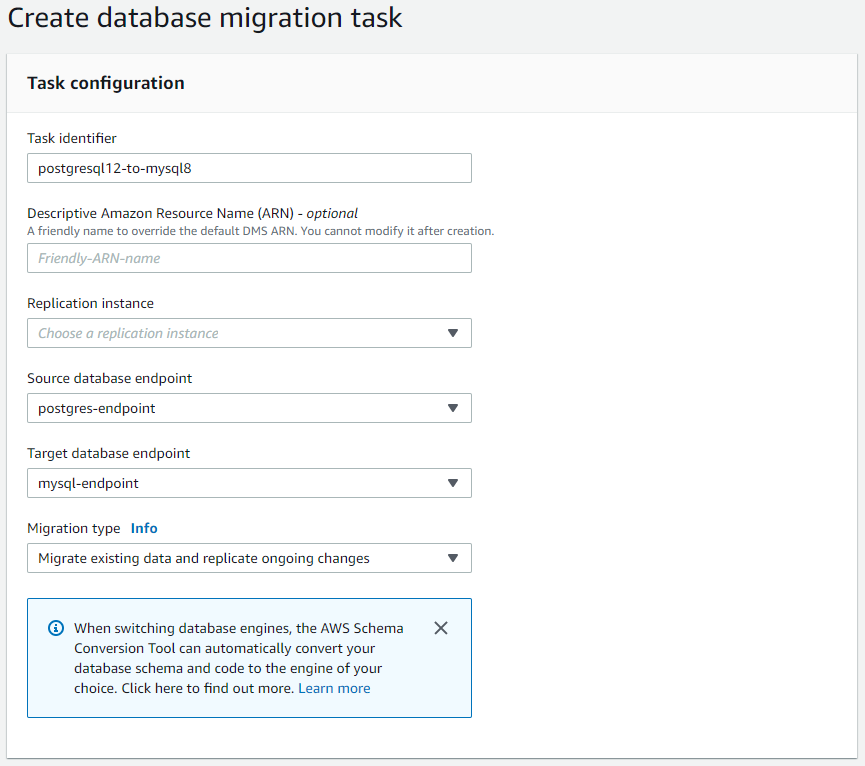

Create a migration task

Create a migration task and select the previously created replication instance, source and target endpoints. We are going to migrate our existing data and replicate ongoing changes.

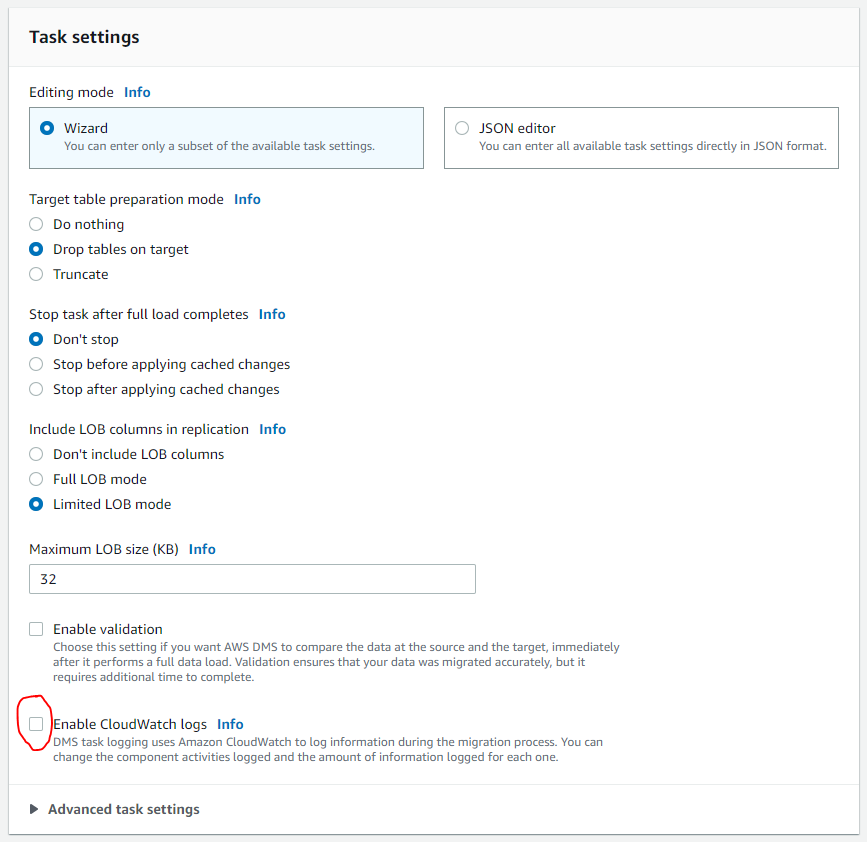

Make sure to enable CloudWatch logs, as the system may otherwise silently fail e.g. when unable to write into the target database. The remaining settings can be left untouched.

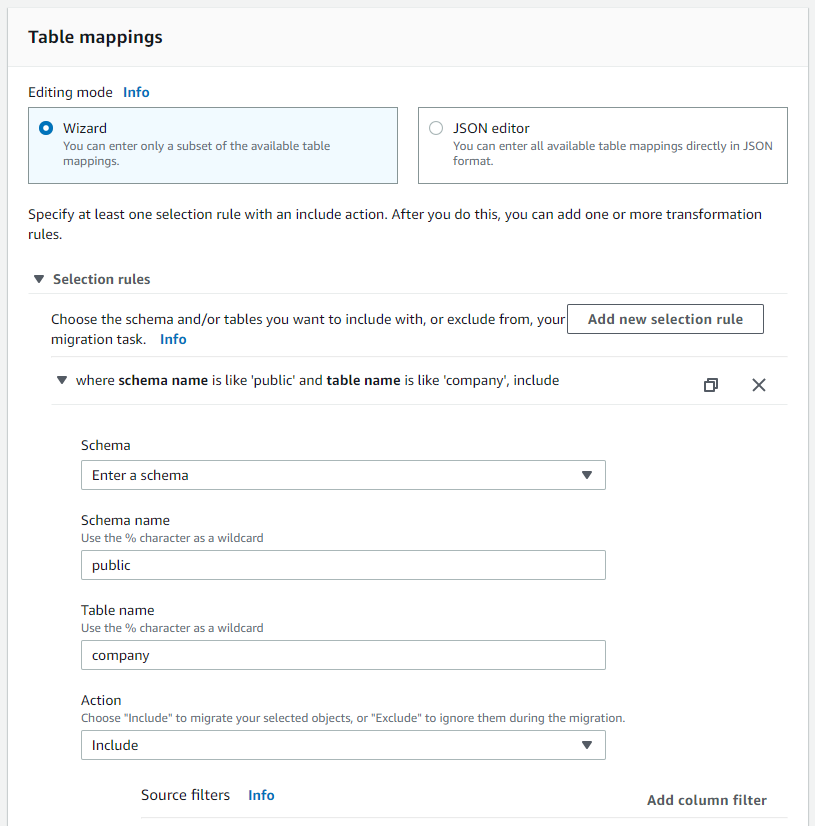

At least one INCLUDE rule is required.

In our case, we want to include the table company from our schema public.

This is also where you’d create your own mapping and migration settings.

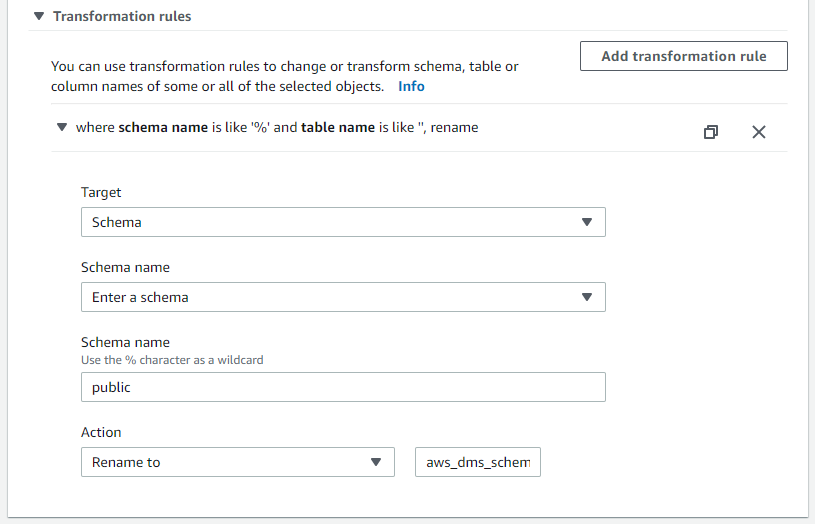

The source schema public will then be replicated as the target schema aws_dms_schema in the MySQL database.

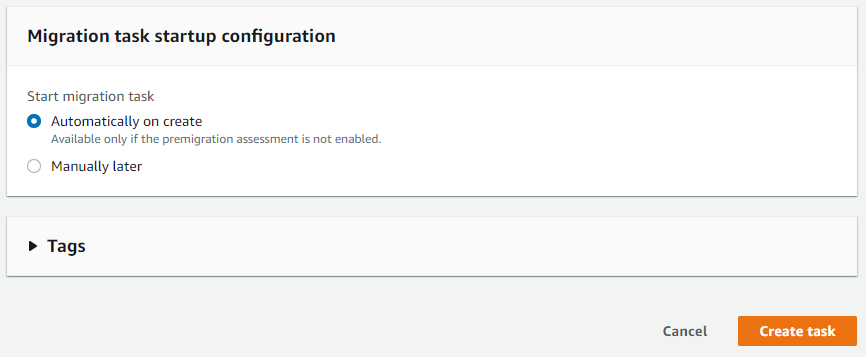

Leaving the following option checked will start the migration as soon as you create it

Et voilà

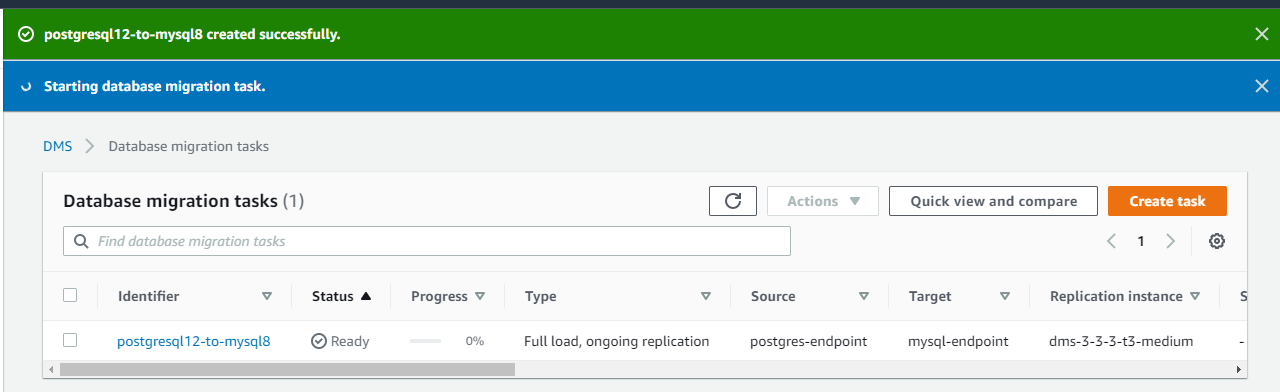

The migration task should have started directly upon creation.

Note: In case the task doesn’t start and notifies an error with the endpoints, check the endpoints configuration once again and run a connection test on both of them.

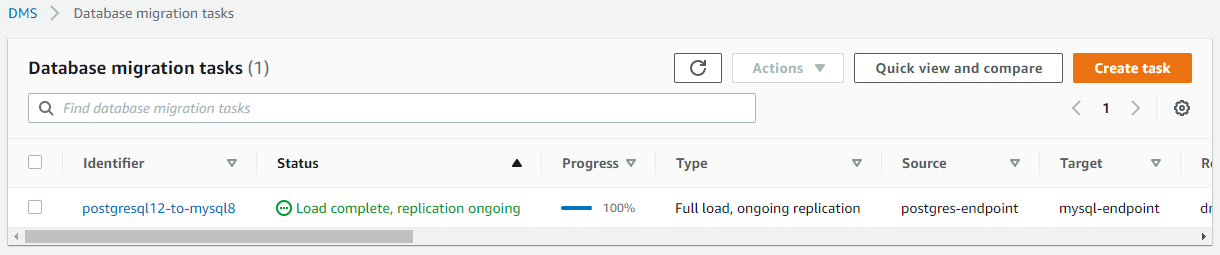

After a while the task status should switch to Load complete, replication ongoing.

Conclusion

AWS database migration service (DMS) can help you achieve your database migration with virtually no downtime. This post presented the case of an heterogeneous migration from PostgreSQL to MySQL but AWS DMS supports more sources for data migration and even more targets for data migration.

I recommend you check out the documentation to learn more about supported sources and targets for data migration.

For simplicity’s sake we used simple passwords and even reused some. In practice you should make sure to always use strong and unique passwords.