Enforcing encryption standards on S3-objects

This content is more than 4 years old, and the cloud moves fast, so some information may be slightly out of date.

We recently had an internal discussion on how to enforce encryption for objects uploaded to S3, and there were two main theories on what we need to do to ensure that new objects get encrypted:

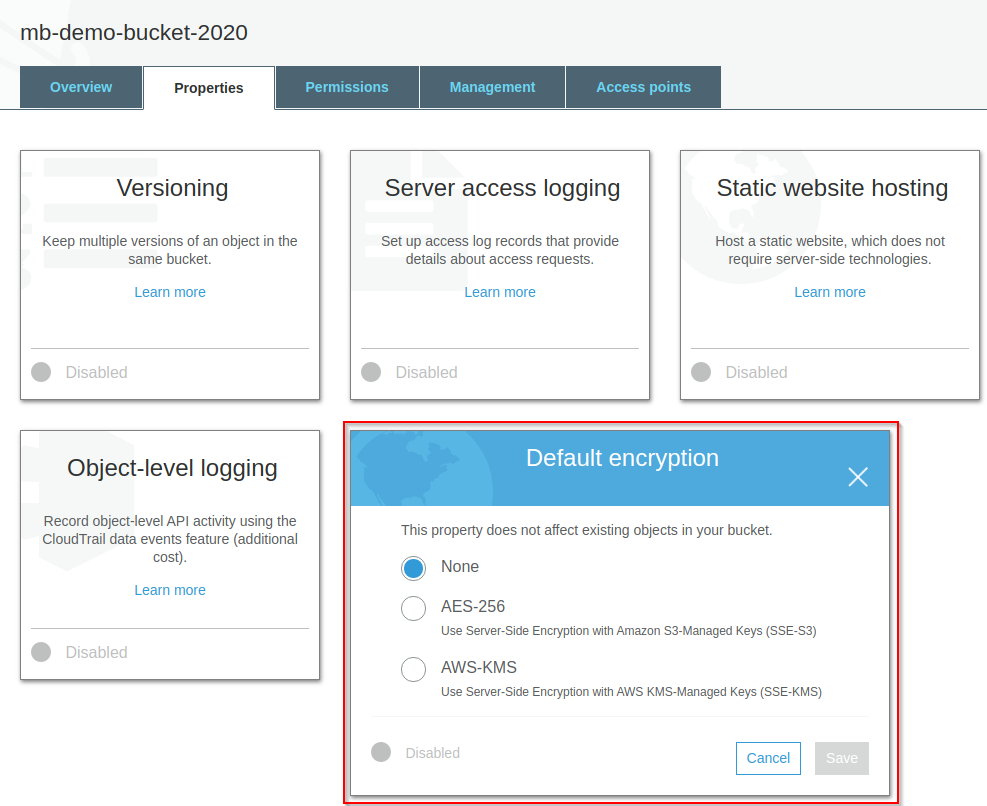

- Enable the default encryption on the bucket

- Enable the default encryption on the bucket and block unencrypted uploads through a bucket policy

In the past, conventional wisdom held that to enforce encryption of objects in S3, you needed a bucket policy that explicitly denies PutObject calls which don’t set the relevant encryption headers.

Some time ago (we couldn’t find the announcement) S3 added the option to encrypt objects in a bucket by default.
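For reference, here is a minimal sketch of what the default-encryption setting looks like as an API payload, assuming boto3's `put_bucket_encryption` call. The helper name `default_encryption_config` and the example key ARN are hypothetical; the function only builds the dictionary, so it runs without AWS credentials.

```python
from typing import Optional


def default_encryption_config(kms_key_arn: Optional[str] = None) -> dict:
    """Build a ServerSideEncryptionConfiguration for s3.put_bucket_encryption.

    Without a key ARN the bucket defaults to SSE-S3 (AES256); with one it
    defaults to SSE-KMS using that key.
    """
    if kms_key_arn is None:
        rule = {"SSEAlgorithm": "AES256"}
    else:
        rule = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_arn}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": rule}]}


if __name__ == "__main__":
    # Hypothetical key ARN, for illustration only.
    config = default_encryption_config(
        "arn:aws:kms:eu-central-1:111122223333:key/example-key-id"
    )
    print(config)
    # Applying it requires boto3 and credentials:
    # boto3.client("s3").put_bucket_encryption(
    #     Bucket="my-bucket", ServerSideEncryptionConfiguration=config)
```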

We weren’t sure if that setting was sufficient to guarantee every new object is encrypted, because we thought you might be able to explicitly say “No encryption please!” on the upload.

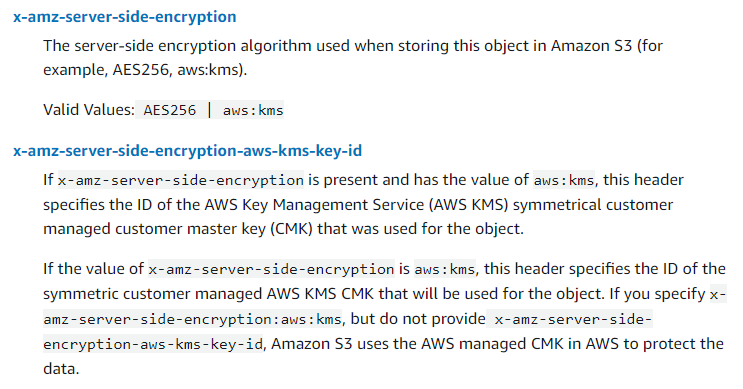

To settle this, we took a look at the PutObject API documentation and found this on the encryption headers:

Essentially, there is no way to explicitly say “I don’t want to encrypt this object”, because that’s already the default behavior of the API call.

This means once you enable default encryption on your bucket, the objects will be encrypted in some way.

It doesn’t necessarily mean it’s the way you chose.

If I set the default encryption of my bucket to use a KMS key (SSE-KMS), I can still send the x-amz-server-side-encryption = AES256 header to switch the object to S3-managed encryption (SSE-S3), which, depending on your compliance requirements, may be a problem.
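The behavior described above can be summarized in a simplified model: if the request carries the encryption header, the client's choice wins; otherwise the bucket default applies. The helper `effective_encryption` is hypothetical and not an S3 API, and it deliberately ignores SSE-C and which KMS key ends up being used.

```python
from typing import Optional


def effective_encryption(bucket_default: str, header: Optional[str]) -> str:
    """Return the server-side encryption an uploaded object ends up with.

    bucket_default: the bucket's default algorithm, "AES256" or "aws:kms".
    header: the request's x-amz-server-side-encryption value, or None if
    the client did not send the header.
    """
    # No header: S3 falls back to the bucket's default encryption.
    if header is None:
        return bucket_default
    # Header present: the client's choice overrides the bucket default.
    return header


# A bucket that defaults to SSE-KMS still accepts an SSE-S3 upload.
print(effective_encryption("aws:kms", None))      # aws:kms
print(effective_encryption("aws:kms", "AES256"))  # AES256
```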

Let’s quickly recap the different kinds of server-side encryption options in S3 before talking about how to solve that particular issue:

- SSE-S3 uses symmetric AES-256 encryption, and S3 handles the key management. You get no visibility into and no control over the key; this is basically a “yes, my objects are encrypted at rest” compliance check that offers little additional security in terms of access management.

- SSE-KMS uses the same underlying encryption algorithm as SSE-S3, but it uses a customer managed key in KMS to create data keys. This gives you additional visibility into the key management and more control over who is able to decrypt your data; this is our personal favorite.

- SSE-C lets you supply your own encryption key for each object, but you’re responsible for the key management: S3 won’t store your key and only performs the encryption and decryption of objects. You probably only want to do this if you’re really paranoid or in a highly regulated environment; it’s a lot of work and easy to screw up.
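On the client side, each option corresponds to different request parameters. The sketch below assumes boto3's `put_object`, whose `ServerSideEncryption`, `SSEKMSKeyId`, `SSECustomerAlgorithm` and `SSECustomerKey` parameters map to the encryption headers; the helper `sse_put_object_args` is hypothetical and only returns the keyword arguments, so it runs without AWS access.

```python
import os
from typing import Optional


def sse_put_object_args(mode: str, kms_key_id: Optional[str] = None,
                        customer_key: Optional[bytes] = None) -> dict:
    """Return the extra boto3 put_object keyword arguments for an SSE mode."""
    if mode == "SSE-S3":
        # Sent as x-amz-server-side-encryption: AES256
        return {"ServerSideEncryption": "AES256"}
    if mode == "SSE-KMS":
        args = {"ServerSideEncryption": "aws:kms"}
        if kms_key_id is not None:
            # Sent as x-amz-server-side-encryption-aws-kms-key-id
            args["SSEKMSKeyId"] = kms_key_id
        return args
    if mode == "SSE-C":
        # SSE-C uses AES-256, so the key must be exactly 32 bytes.
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) key")
        # S3 uses the key for this request only and does not store it.
        return {"SSECustomerAlgorithm": "AES256", "SSECustomerKey": customer_key}
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    print(sse_put_object_args("SSE-S3"))
    print(sorted(sse_put_object_args("SSE-C", customer_key=os.urandom(32))))
    # Usage (requires boto3 and credentials):
    # boto3.client("s3").put_object(Bucket="my-bucket", Key="k", Body=b"data",
    #                               **sse_put_object_args("SSE-S3"))
```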

After this primer, we can talk about our change-of-encryption problem. Personally, I prefer that clients don’t need to know or worry about the kind of encryption we apply in S3 in order to upload objects, so we could simply use the bucket policy to block any request that has the encryption headers present. That would punish clients who specify the correct encryption settings, though, which I also don’t like. Ideally, the bucket policy only denies requests with incorrect encryption configurations. Let’s try to build something like that.

Since you can’t use SSE-C for default encryption (S3 can’t know the key if it’s provided by the user), we only need to consider SSE-S3 and SSE-KMS.

Let’s start with the easy case: SSE-S3.

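When we want to enforce the use of SSE-S3, we need to deny all requests that set the x-amz-server-side-encryption header to anything other than AES256, most notably aws:kms. Requests without the header must still be allowed, so that default encryption can take over.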

A rule for that may look like this (you need to replace $BucketName):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::$BucketName/*",
      "Condition": {
        "Null": {
          "s3:x-amz-server-side-encryption": "false"
        },
        "StringNotEqualsIfExists": {
          "s3:x-amz-server-side-encryption": "AES256"
        }
      }
    },
    {
      "Sid": "AllowSSLRequestsOnly",
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": ["arn:aws:s3:::$BucketName", "arn:aws:s3:::$BucketName/*"],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      },
      "Principal": "*"
    }
  ]
}
```
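To see why the first statement blocks only wrong headers, here is a simplified model of how its two conditions combine. All condition operators in one statement must match for the Deny to apply: `Null: false` matches only when the header is present, and `StringNotEqualsIfExists` matches when the header is absent or has a value other than AES256. The function name is hypothetical, and it models only this one statement, not full IAM policy evaluation.

```python
from typing import Mapping

SSE_HEADER = "x-amz-server-side-encryption"


def denied_by_sse_s3_policy(headers: Mapping[str, str]) -> bool:
    """Simplified model of the first Deny statement in the SSE-S3 policy.

    headers maps lowercase request header names to their values.
    """
    value = headers.get(SSE_HEADER)
    # "Null": {...: "false"} matches only when the header is present.
    header_present = value is not None
    # "StringNotEqualsIfExists" matches when the header is absent, or when
    # it is present with a value other than AES256.
    not_aes256_if_exists = value is None or value != "AES256"
    # All condition operators in one statement must match for the Deny.
    return header_present and not_aes256_if_exists


print(denied_by_sse_s3_policy({}))                       # False
print(denied_by_sse_s3_policy({SSE_HEADER: "AES256"}))   # False
print(denied_by_sse_s3_policy({SSE_HEADER: "aws:kms"}))  # True
```

Without the Null condition, the IfExists operator alone would also match requests that omit the header, and the policy would block exactly the default-encryption uploads we want to allow.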

It gets more interesting with the SSE-KMS case.

Here we need to deny all requests that use the wrong encryption type (x-amz-server-side-encryption = AES256) or an incorrect KMS key (a different value in x-amz-server-side-encryption-aws-kms-key-id).

A rule for that looks like this (you need to replace $BucketName, $Region, $AccountId and $KeyId):

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::$BucketName/*",
      "Condition": {
        "StringNotEqualsIfExists": {
          "s3:x-amz-server-side-encryption": "aws:kms"
        },
        "Null": {
          "s3:x-amz-server-side-encryption": "false"
        }
      }
    },
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::$BucketName/*",
      "Condition": {
        "StringNotEqualsIfExists": {
          "s3:x-amz-server-side-encryption-aws-kms-key-id": "arn:aws:kms:$Region:$AccountId:key/$KeyId"
        }
      }
    },
    {
      "Sid": "AllowSSLRequestsOnly",
      "Action": "s3:*",
      "Effect": "Deny",
      "Resource": ["arn:aws:s3:::$BucketName", "arn:aws:s3:::$BucketName/*"],
      "Condition": {
        "Bool": {
          "aws:SecureTransport": "false"
        }
      },
      "Principal": "*"
    }
  ]
}
```
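Both policies use `$Name` placeholders, which happens to match the syntax of Python's `string.Template`, so a small helper can fill them in and confirm the result is still valid JSON before you apply it. This is a sketch: the helper `render_policy` and the example values are hypothetical, and the template contains only the key-ID statement to keep it short.

```python
import json
from string import Template

# Only the key-ID statement of the SSE-KMS policy, to keep the example short.
POLICY_TEMPLATE = """{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Principal": "*",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::$BucketName/*",
      "Condition": {
        "StringNotEqualsIfExists": {
          "s3:x-amz-server-side-encryption-aws-kms-key-id": "arn:aws:kms:$Region:$AccountId:key/$KeyId"
        }
      }
    }
  ]
}"""


def render_policy(template: str, **values: str) -> dict:
    """Fill in $Placeholders and parse the result to make sure it is valid JSON.

    Template.substitute raises KeyError if any placeholder is left unfilled.
    """
    return json.loads(Template(template).substitute(**values))


if __name__ == "__main__":
    policy = render_policy(
        POLICY_TEMPLATE,
        BucketName="my-bucket",  # hypothetical values
        Region="eu-central-1",
        AccountId="111122223333",
        KeyId="1234abcd-12ab-34cd-56ef-1234567890ab",
    )
    print(json.dumps(policy, indent=2))
```

Using `substitute` rather than `safe_substitute` is deliberate: a forgotten placeholder fails loudly instead of ending up in the deployed policy.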

We also built a small CDK app to verify that this works as intended. It sets up two buckets, one with SSE-S3 and the other with SSE-KMS default encryption. The first bucket gets the SSE-S3 policy above, the second the SSE-KMS policy. There is also a Lambda function with unit tests written in Python that cover seven different scenarios to verify that the policies indeed work as intended. You can check all of this out in this GitHub Repository if you’d like to try it for yourself.

```python
# Test methods from the Lambda's test suite (bodies omitted).

def test_put_without_encryption_to_sse_s3_bucket_should_work(self):
    """Default encryption should take over when we specify nothing"""

def test_put_without_encryption_to_sse_kms_bucket_should_work(self):
    """Default encryption should take over when we specify nothing"""

def test_put_with_explicit_encryption_to_sse_s3_bucket_should_work(self):
    """Explicitly setting the correct encryption type should work"""

def test_put_with_explicit_encryption_to_sse_kms_bucket_should_work(self):
    """Explicitly setting the correct encryption type should work"""

def test_sse_kms_to_sse_s3_fails(self):
    """
    Assert that we get an error when we try to store SSE-KMS encrypted
    objects in the bucket that is SSE-S3 encrypted.
    """

def test_sse_s3_to_sse_kms_fails(self):
    """
    Assert that we get an error when we try to store SSE-S3 encrypted
    objects in the bucket that is SSE-KMS encrypted.
    """

def test_wrong_kms_key_fails(self):
    """
    Assert that a put request to the SSE-KMS encrypted bucket with a
    different KMS key fails.
    """
```

Conclusion

If your goal is simply to ensure that your objects in S3 are encrypted at rest, S3 default encryption is the right tool for the job. When you need to ensure that a specific encryption method is used, combine S3 default encryption with a bucket policy like the ones above. In this post we’ve given you the tools to achieve that.

Special thank you to Bulelani and his colleagues from AWS support who helped us out with debugging the policies.

We hope you enjoyed reading this article. For feedback, questions and anything else you might want to share, feel free to reach out to us on Twitter (@Maurice_Brg & @Megaproaktiv).